Intro to Vector Embeddings

Data Science Dojo & Weaviate

Victoria Slocum

Machine Learning Engineer

[1.5, -0.4, 7.2, 19.6, 20.2, 1.7, -0.3, 6.9, 19.1, 21.1]

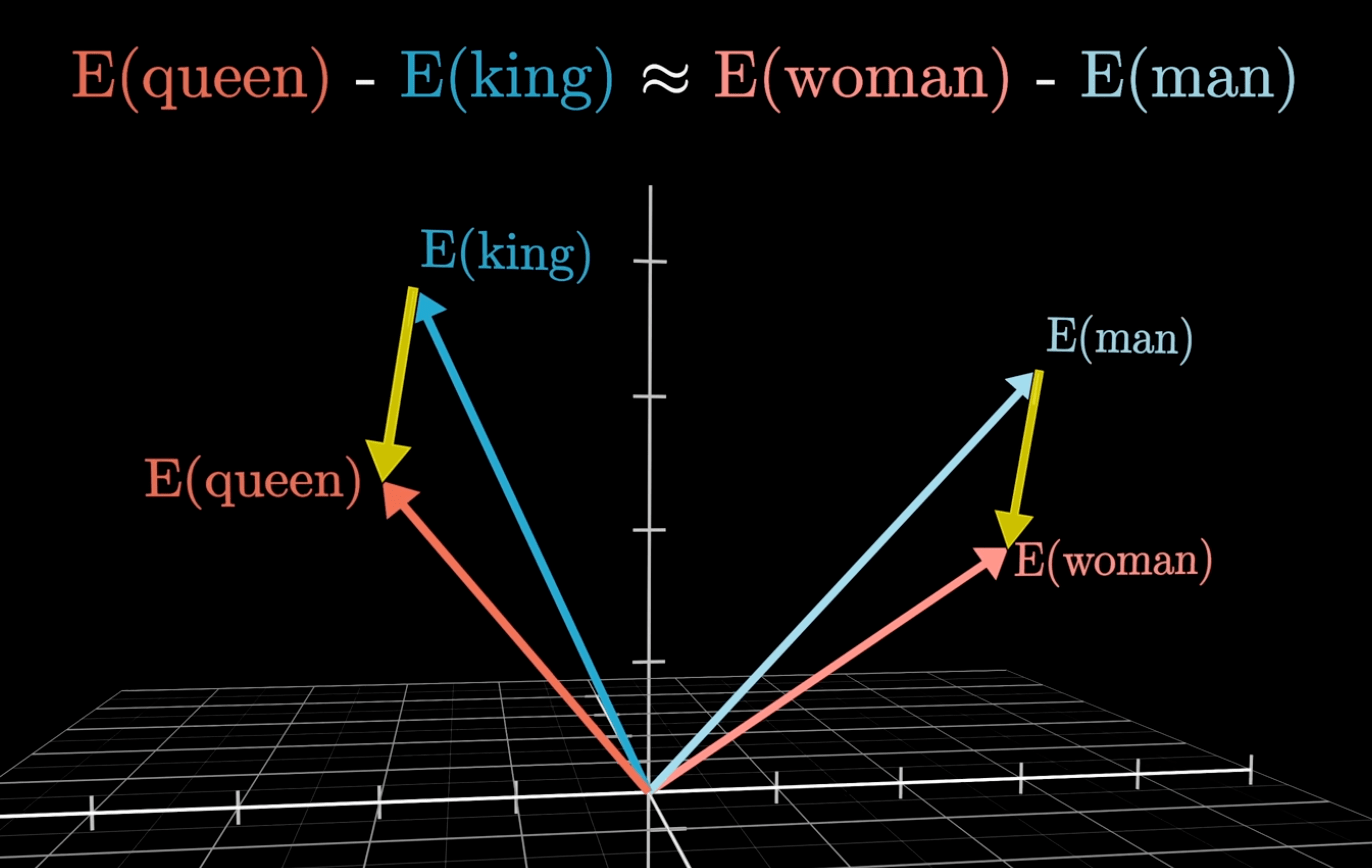

You can do a bunch of cool things with vectors

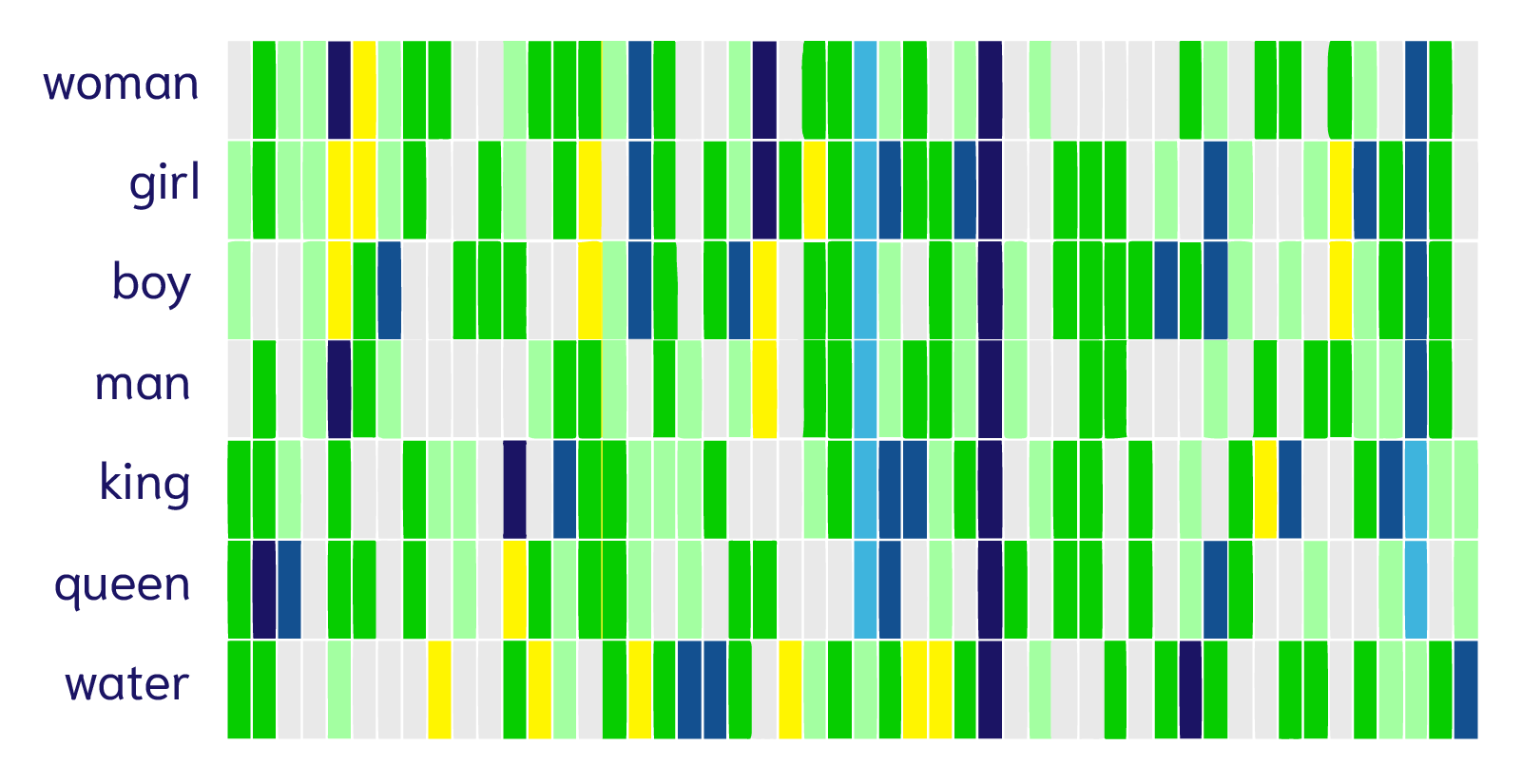

[queen] - [king] ≈ [woman] - [man]

source: @3blue1brown

What does this mean?

The vectors encode the meaning of the things they represent in some way
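The analogy can be checked by hand on a toy example. This is a minimal sketch with made-up 2-D vectors whose dimensions are assumed to mean "royalty" and "femininity". Real embeddings are learned, have far more dimensions, and their dimensions carry no such labels, so the equality only holds approximately there.

```python
# Toy 2-D "embeddings" with made-up values: [royalty, femininity].
# These numbers are assumptions for illustration, not output of a real model.
toy_vectors = {
    "king":  [1.0, 0.0],
    "queen": [1.0, 1.0],
    "man":   [0.0, 0.0],
    "woman": [0.0, 1.0],
}


def subtract(a, b):
    """Element-wise difference of two equal-length vectors."""
    return [x - y for x, y in zip(a, b)]


# The direction from "king" to "queen" ...
royal_offset = subtract(toy_vectors["queen"], toy_vectors["king"])
# ... matches the direction from "man" to "woman".
common_offset = subtract(toy_vectors["woman"], toy_vectors["man"])

print(royal_offset)   # [0.0, 1.0]
print(common_offset)  # [0.0, 1.0]
```

Both differences point along the same direction, which is what it means for the vectors to encode a relationship between the words.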

R (red), G (green), B (blue)

[0, 0, 0]

[255, 255, 255]

[255, 0, 0]

[255, 0, 255]

[80, 200, 120]

Each number represents how much red, green, or blue is in the color.

This is exactly what a vector embedding is: a sequence of numbers that represents meaning.
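Because colors are just vectors, "similar" can be computed as "close together". Here is a small sketch using the RGB values from the slides. The color names are labels added for this example, and the crimson query value is an extra assumption that is not in the original deck.

```python
import math

# RGB values from the slides; the names are labels added for this sketch.
colors = {
    "black":   [0, 0, 0],
    "white":   [255, 255, 255],
    "red":     [255, 0, 0],
    "magenta": [255, 0, 255],
    "emerald": [80, 200, 120],
}


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_color(query):
    """Return the name of the stored color closest to the query vector."""
    return min(colors, key=lambda name: euclidean_distance(colors[name], query))


crimson = [220, 20, 60]  # extra query color, not from the slides
print(nearest_color(crimson))  # red
```

Searching over embeddings works the same way in principle: store vectors, then look for the stored vectors nearest to a query vector.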

[0, 0, 0]

R (red), G (green), B (blue)

cat

[1.5, -0.4, 7.2, 19.6, 20.2, 1.7, -0.3, 6.9, 19.1, 21.1, ...]

(1000+ dim)

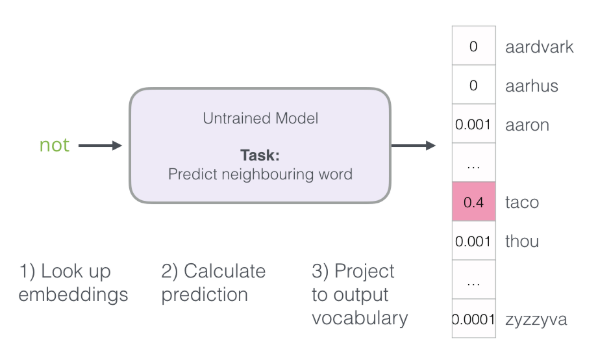

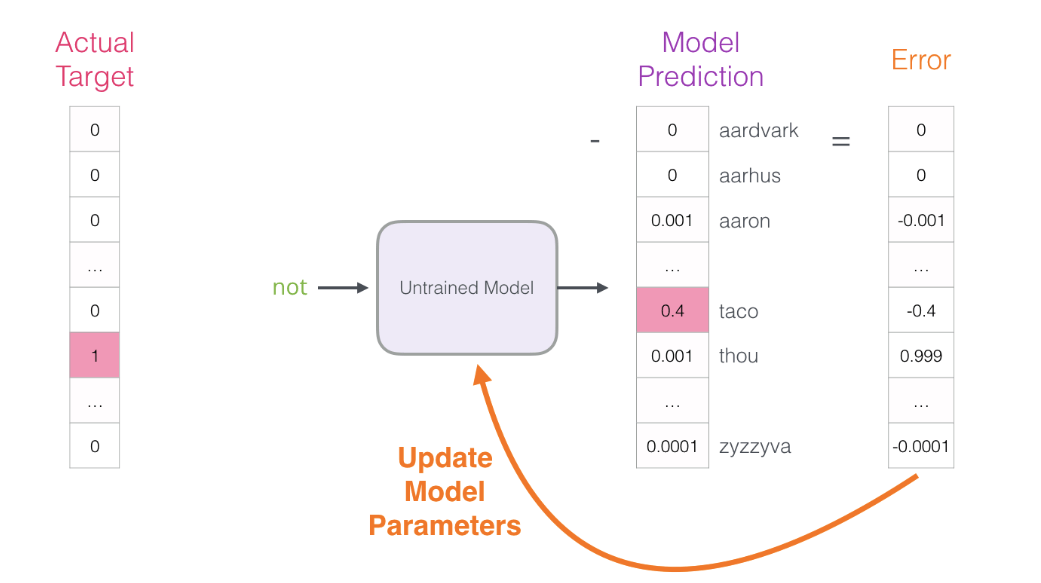

How do embedding models actually work?

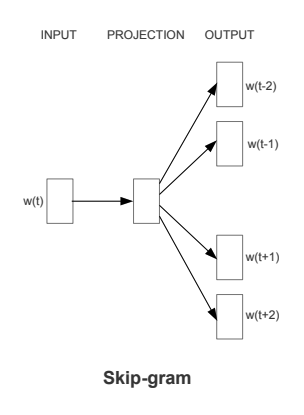

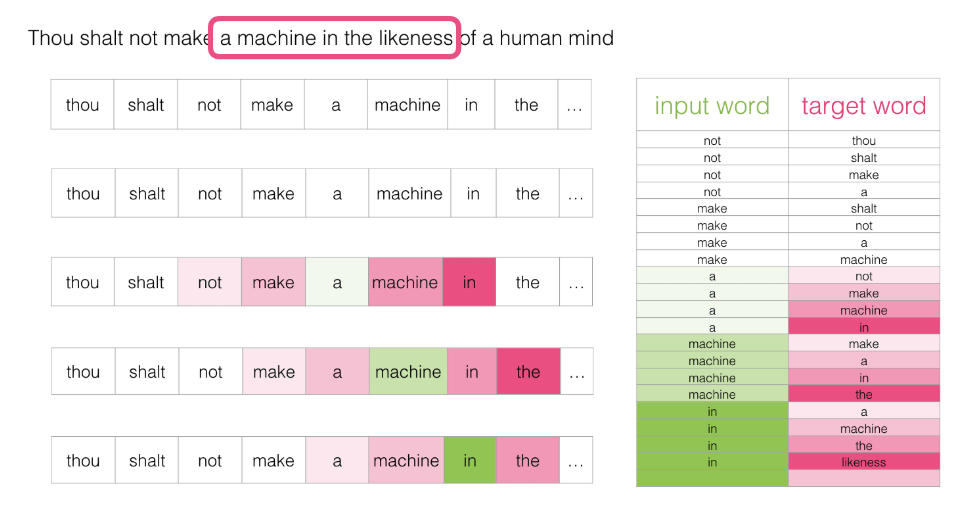

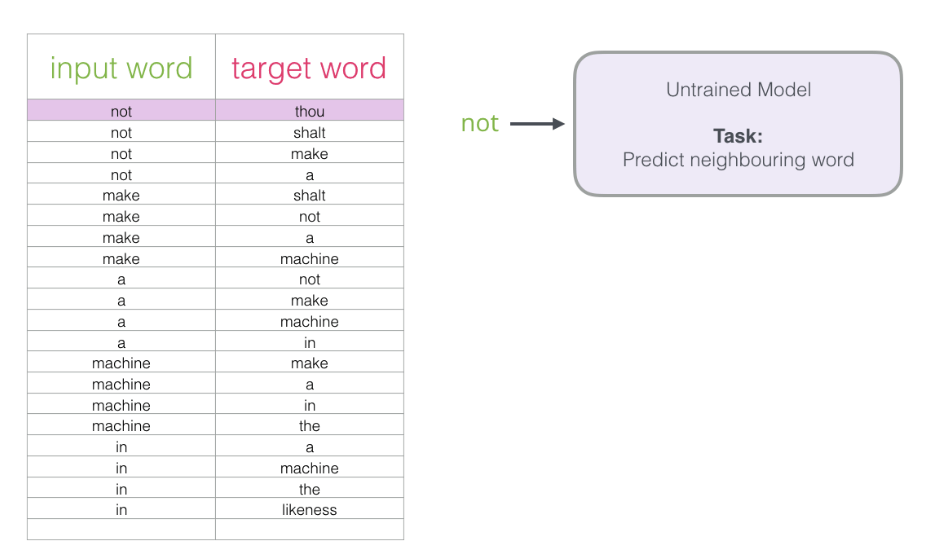

word-level dense vector models (Word2vec)

Uses a neural network to learn word associations from a large corpus of text

(it was initially trained by Google on about 100 billion words)

Limitations

- it doesn’t address words with multiple meanings (polysemous words)

- it doesn’t address words with ambiguous meanings

- it only embeds at the word level*
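The multiple-meanings limitation follows from how a word-level model is used: each word maps to one fixed vector, whatever the sentence. The sketch below stands in for a trained model with a hypothetical lookup table of made-up 3-D values. It is not the Word2vec training procedure, only the lookup step.

```python
# Hypothetical lookup table with made-up values. A trained word-level model
# stores one learned vector per vocabulary word in the same way.
static_table = {
    "bank":  [0.4, 0.2, 0.5],
    "river": [0.9, 0.1, 0.0],
    "money": [0.0, 0.1, 0.9],
    "of":    [0.1, 0.1, 0.1],
    "the":   [0.1, 0.2, 0.1],
    "in":    [0.2, 0.1, 0.1],
}


def embed_words(sentence):
    """Look up one vector per word; the surrounding words are ignored."""
    return [static_table[word] for word in sentence.lower().split()]


bank_by_river = embed_words("bank of the river")[0]
bank_with_money = embed_words("money in the bank")[3]

# Same vector for both senses of "bank".
print(bank_by_river == bank_with_money)  # True
```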

transformer models (BERT, etc.; ELMo is an earlier contextual model built on LSTMs)

Uses the transformer architecture, which takes the entire input text into account so that each word's vector is modified by the surrounding text.

(the basis of most modern language models)

Limitations

- increased compute and memory requirements
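To illustrate how surrounding text can modify a word's vector, here is a toy version of a single self-attention step. It is a sketch under strong assumptions: made-up 2-D vectors, no learned weights, one step only. Real transformer models stack many layers with learned projections.

```python
import math

# Made-up 2-D starting vectors; values are assumptions for illustration.
static_table = {
    "river": [1.0, 0.0],
    "money": [0.0, 1.0],
    "bank":  [0.5, 0.5],
}


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def contextualize(words):
    """One attention step: each word becomes a weighted mix of all words."""
    vectors = [static_table[w] for w in words]
    output = []
    for query in vectors:
        weights = softmax([dot(query, key) for key in vectors])
        mixed = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(len(query))
        ]
        output.append(mixed)
    return output


bank_near_river = contextualize(["river", "bank"])[1]
bank_near_money = contextualize(["money", "bank"])[1]

print(bank_near_river)  # [0.75, 0.25]
print(bank_near_money)  # [0.25, 0.75]
```

The same word now gets a different vector in each context. Every word is compared with every other word, so the work grows with the square of the input length, which is one source of the increased compute and memory requirements.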

Demo time!!

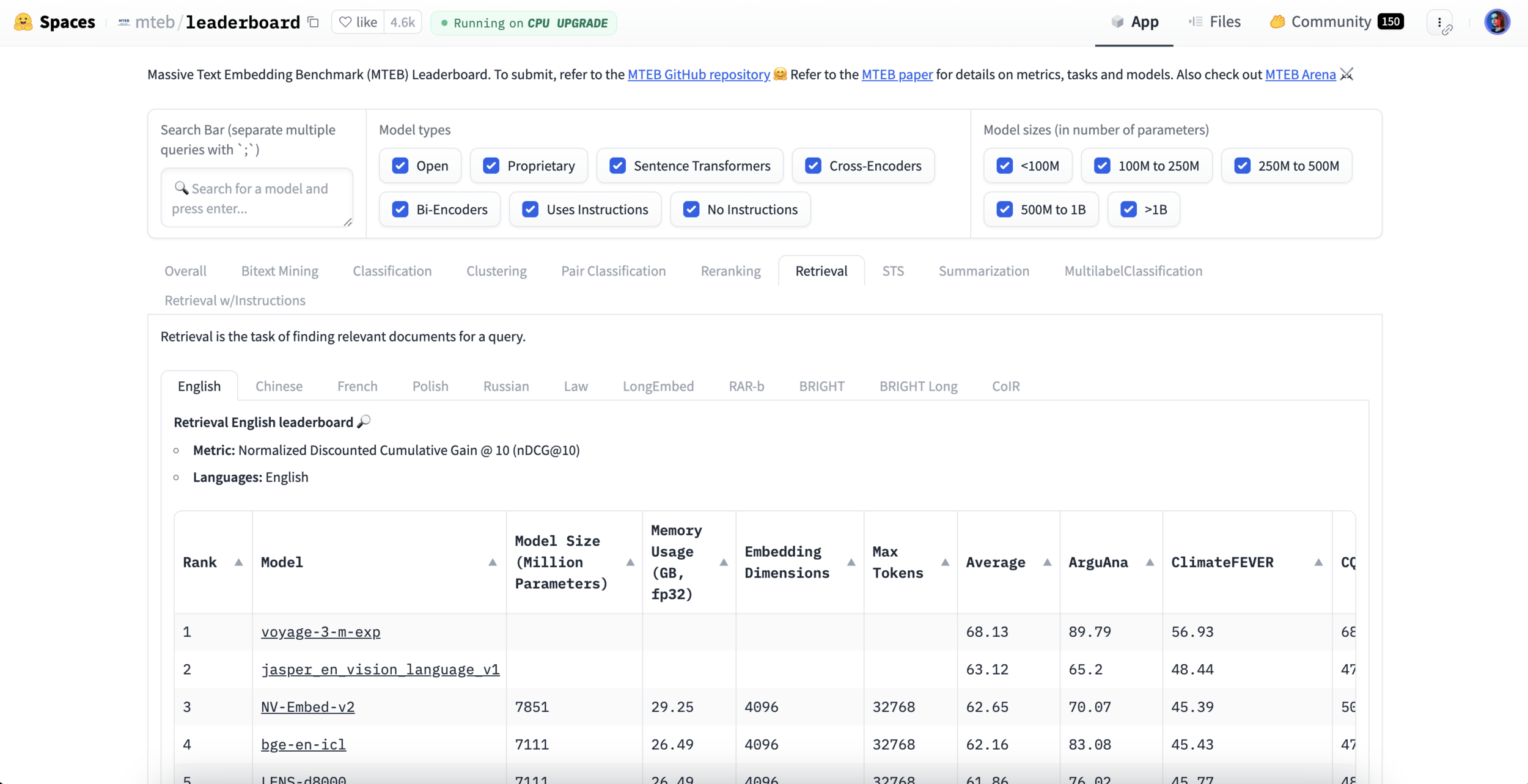

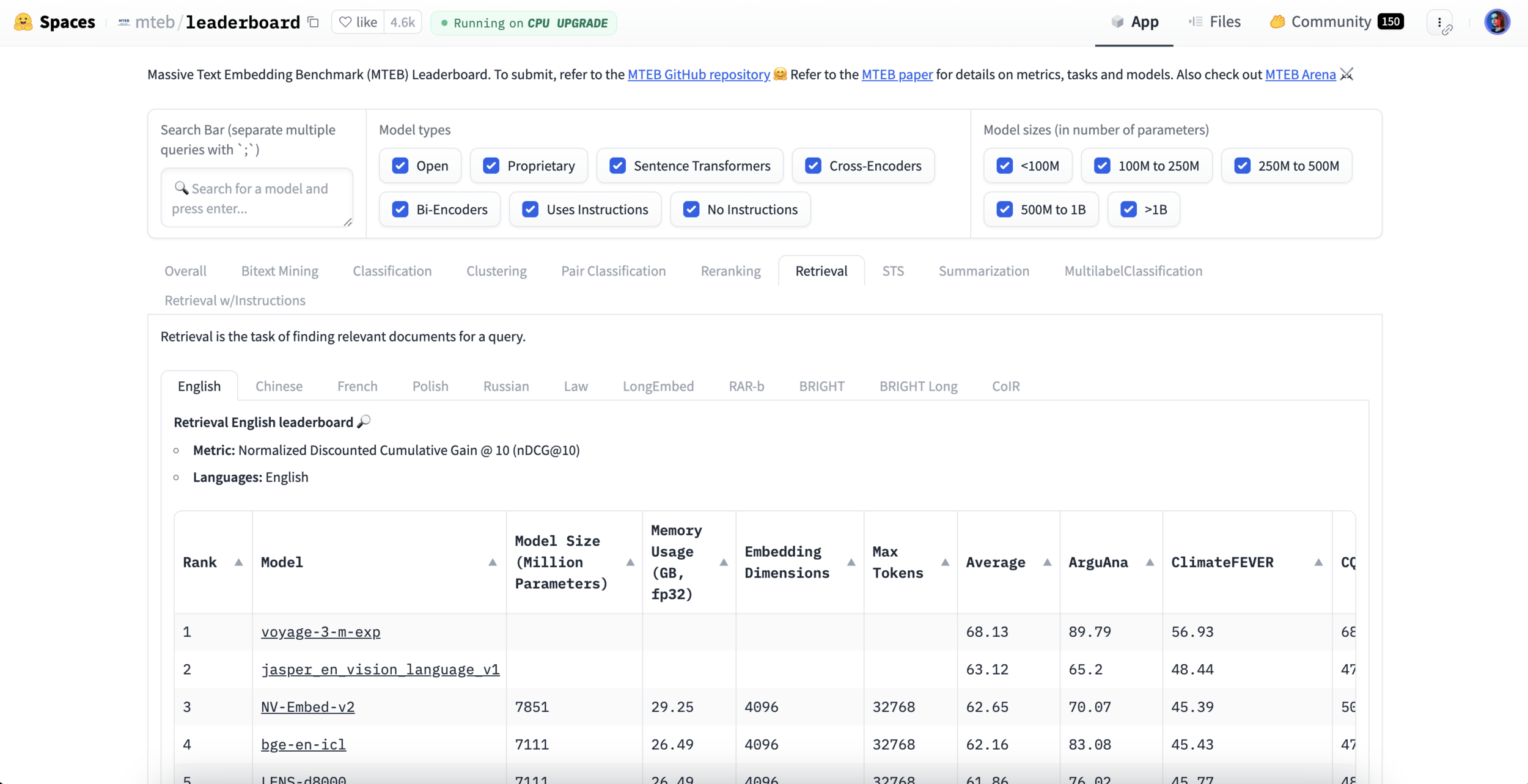

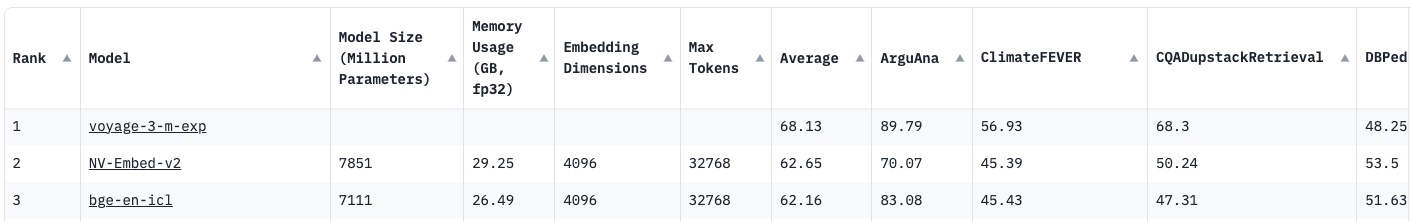

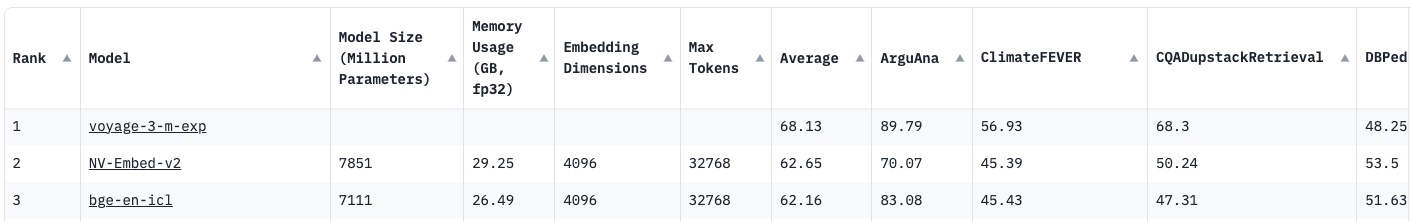

So, how do you actually choose an embedding model?

Data Performance

- language specificity

- domain specificity

- comparison / benchmarking

Infrastructure considerations

- inference costs

- storage costs

- latency / throughput
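Storage cost can be estimated before picking a model, since it scales with the number of vectors and their dimensionality. This is a back-of-the-envelope sketch that assumes uncompressed 32-bit floats (4 bytes per value) and ignores index overhead. The collection size and the two dimension counts are hypothetical.

```python
def vector_storage_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw size of the vectors alone, assuming float32 by default."""
    return num_vectors * dimensions * bytes_per_value


num_documents = 1_000_000  # hypothetical collection size

# Two hypothetical models that differ only in output dimensions.
small_model_gb = vector_storage_bytes(num_documents, 384) / 1e9
large_model_gb = vector_storage_bytes(num_documents, 1024) / 1e9

print(small_model_gb)  # 1.536
print(large_model_gb)  # 4.096
```

A model with larger vectors may retrieve better, but every extra dimension is paid for again on each stored object.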

Data Performance

The MTEB Leaderboard

Influences hardware choices

What is your use case?

Retrieval performance

inference cost

storage cost

multilingual, code, long context, etc

take with a grain of salt

specific domain datasets
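One way to act on "take with a grain of salt" is to measure retrieval on a small labeled sample of your own data. The sketch below computes recall@k. The document ids and the evaluation set are hypothetical, and the retrieved lists are assumed to come from whichever model is being tested.

```python
def recall_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the relevant documents found in the top k results."""
    top_k = retrieved_ids[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant_ids)
    return hits / len(relevant_ids)


def mean_recall_at_k(eval_set, k):
    """Average recall@k over all labeled queries."""
    scores = [recall_at_k(q["retrieved"], q["relevant"], k) for q in eval_set]
    return sum(scores) / len(scores)


# Hypothetical results for two labeled queries from one candidate model.
eval_set = [
    {"retrieved": ["d3", "d7", "d1", "d9"], "relevant": {"d3", "d1"}},
    {"retrieved": ["d2", "d5", "d8", "d4"], "relevant": {"d4"}},
]

print(mean_recall_at_k(eval_set, k=3))  # 0.5
print(mean_recall_at_k(eval_set, k=4))  # 1.0
```

Running the same evaluation set against each candidate model gives a comparison grounded in your domain rather than in a public benchmark alone.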

Let's talk about cost

Closed-Source Options (accessed through an API)

Benefits

- Speed

- Easy to use and access

- No hosting

Drawbacks

- Rate limits

- Batch upload

- Locked-in to the provider

Open-Source Options (hosted locally)

Benefits

- Control

- Flexibility

- Visibility and open access

Drawbacks

- Need to host yourself

- Infrastructure costs

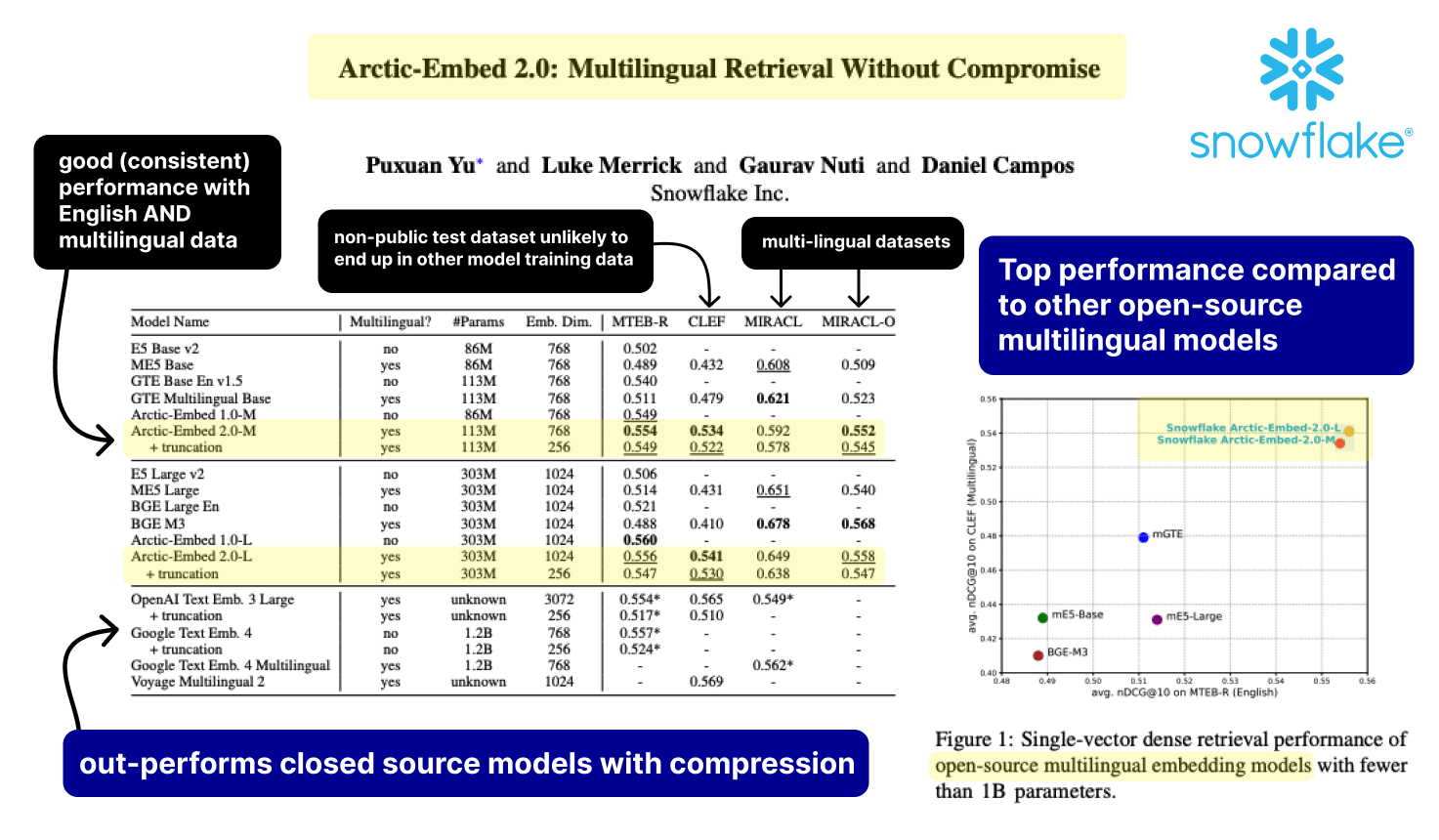

Embedding models case study

Snowflake Arctic Embed 2.0 vs top models on MTEB

AI isn't free (not even close)

next webinar!

with Data Science Dojo

connect with me on LinkedIn!